AI-enabled computational imaging makes high-quality infrared imaging possible with simple, compact optical systems. However, its reliance on specialized image reconstruction algorithms adds detection latency and significantly increases computational and power demands. These factors pose serious challenges for deploying computational imaging in high-speed, low-power scenarios such as drone-based remote sensing and biomedical diagnostics. Neural network model compression is a key strategy for accelerating inference on edge devices, but conventional approaches focus primarily on reducing network complexity and the number of multiply–accumulate operations (MACs). They often overlook the unique constraints of computational imaging and the hardware-specific requirements of edge AI chips, so they yield only limited gains in practical efficiency when computational imaging systems are deployed at the edge.

In response to these challenges, Xuquan Wang, Yujie Xing, and collaborators from the team led by Professors Zhanshan Wang and Xinbin Cheng at the School of Physics and Engineering, Tongji University, proposed an edge AI-accelerated reconstruction strategy tailored to the hardware characteristics of computational imaging systems. Their approach combines operator-adaptive reconstruction, sensitivity-based pruning, and hybrid quantization on edge neural network chips, balancing reconstruction speed against image quality for single-lens infrared computational imaging cameras. In June 2025, their findings were published online in Advanced Imaging, a leading journal in the field of computational imaging, under the title "Edge Accelerated Reconstruction Using Sensitivity Analysis for Single-Lens Computational Imaging."

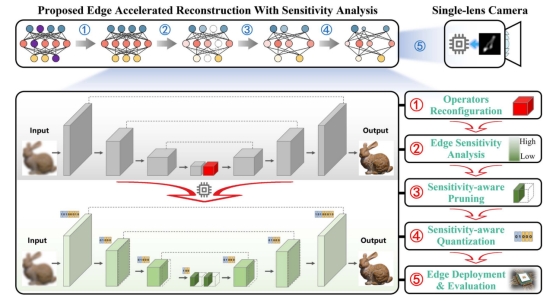

Fig 1. Edge-accelerated reconstruction strategy for single-lens infrared computational imaging based on sensitivity analysis

Fig 1 illustrates the proposed edge-accelerated reconstruction method for single-lens computational imaging systems, which is based on end-to-end sensitivity analysis. The strategy targets the real-world performance of the reconstruction algorithm on AI chips: by compressing the reconstruction network deployed on NPU hardware into a lightweight form, it balances image restoration quality against network inference speed. To match the reconstruction algorithm to the AI chip architecture, the study first analyzes the chip's operator adaptability and the pruning and quantization sensitivity of the individual network modules. Guided by both the chip's computational characteristics and the network's sensitivity profile, a tailored model compression strategy is then applied to improve edge deployment performance.
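The idea of a per-module sensitivity analysis can be illustrated with a minimal sketch. It is not the authors' implementation: the toy network (a chain of small dense layers), the output-MSE metric, the 4-bit probe, and the rule of keeping a fixed fraction of modules at higher precision are all assumptions made for illustration, and the function names are hypothetical. The sketch covers only quantization sensitivity; pruning sensitivity could be probed the same way by zeroing weights instead of quantizing them.

```python
import random

def fake_quantize(weights, bits):
    """Symmetric uniform quantization of a weight matrix, returned as floats."""
    max_abs = max(abs(w) for row in weights for w in row)
    if max_abs == 0:
        return [row[:] for row in weights]
    levels = 2 ** (bits - 1) - 1
    scale = max_abs / levels
    return [[round(w / scale) * scale for w in row] for row in weights]

def forward(modules, x):
    """Toy stand-in for a network: dense layers with ReLU between them."""
    for i, weights in enumerate(modules):
        x = [sum(w * v for w, v in zip(row, x)) for row in weights]
        if i < len(modules) - 1:
            x = [max(0.0, v) for v in x]
    return x

def mse(a, b):
    return sum((p - q) ** 2 for p, q in zip(a, b)) / len(a)

def quantization_sensitivity(modules, inputs, bits):
    """Mean output MSE caused by quantizing one module at a time."""
    reference = [forward(modules, x) for x in inputs]
    scores = []
    for i in range(len(modules)):
        trial = list(modules)
        trial[i] = fake_quantize(modules[i], bits)
        outputs = [forward(trial, x) for x in inputs]
        total = sum(mse(o, r) for o, r in zip(outputs, reference))
        scores.append(total / len(inputs))
    return scores

def assign_bit_widths(scores, low_bits=8, high_bits=16, keep_fraction=0.5):
    """Hypothetical rule: the most sensitive modules keep the higher precision."""
    n_high = max(1, int(len(scores) * keep_fraction))
    ranked = sorted(range(len(scores)), key=lambda i: scores[i], reverse=True)
    high = set(ranked[:n_high])
    return [high_bits if i in high else low_bits for i in range(len(scores))]

# Three random 4x4 modules and eight random calibration inputs (made-up data).
random.seed(0)
modules = [[[random.uniform(-1, 1) for _ in range(4)] for _ in range(4)]
           for _ in range(3)]
inputs = [[random.uniform(0, 1) for _ in range(4)] for _ in range(8)]

scores = quantization_sensitivity(modules, inputs, bits=4)
plan = assign_bit_widths(scores)
print("sensitivity per module:", scores)
print("bit width per module:  ", plan)
```

In this sketch the module whose quantization disturbs the output most stays at 16 bits while the others drop to 8 bits. A hardware-aware version would also restrict the choices to the bit widths and operators that the target NPU actually supports.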

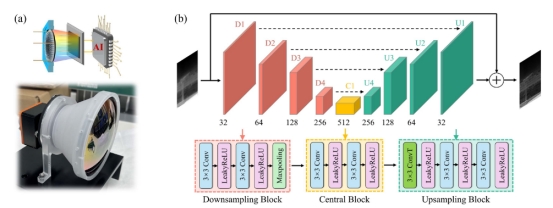

Fig 2. (a) Prototype of the single-lens infrared computational camera used in this work. (b) Architecture of the original network used in this work.

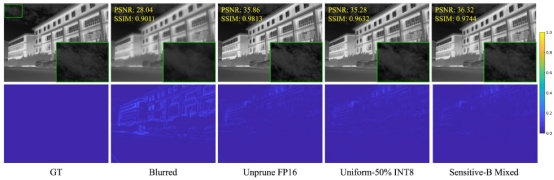

Fig 3. Experimental results of reconstructed images for different models

The edge acceleration strategy proposed in this study achieves real-time image reconstruction at over 25 FPS while maintaining reconstruction quality comparable to that of the original model. In contrast to pruning and quantization schemes that lack hardware-aware optimization, the proposed method uses hardware–software co-optimization to balance reconstruction performance against computational efficiency. This approach paves the way for lightweight, low-latency computational imaging applications in domains such as UAV-based optical sensing and in situ medical diagnostics.

Tongji University is the primary affiliation for this work. Professor Xinbin Cheng and Associate Professor Xiong Dun, both of Tongji University, are the co-corresponding authors. Assistant Professor Xuquan Wang and Yujie Xing, also of Tongji University, are the co-first authors. Other key contributors include Professor Zhanshan Wang and PhD candidate Ziyu Zhao.

Paper link: http://dx.doi.org/10.3788/AI.2025.10003