Recently, the team of Professor Zhanshan Wang and Professor Xinbin Cheng from IPOE proposed an automatic classification method for hyperspectral images based on principal component analysis (PCA) and VGG (PCA-VGG). The method uses characteristic spectral distillation to convert the original hyperspectral image into a spectral-spatial feature image and, combined with a fine-tuned VGG model, achieves an accuracy of 88.0% in cricket species identification while balancing real-time performance against the computing power that hyperspectral data requires. The results were published in Optics & Laser Technology under the title "Visible-NIR hyperspectral imaging based on characteristic spectral distillation used for species identification of similar crickets".

Insects are the most diverse animal group on Earth and are of great value to ecological research, environmental protection, and agricultural production. Rapid and accurate identification of insect species has long been an urgent need in field biodiversity research. Traditional methods based on visual observation and molecular genetic markers cannot balance accuracy with real-time performance. In recent years, computer vision methods based on deep learning have been widely used for automatic insect classification, but their accuracy remains low for insect species with similar appearances. Hyperspectral imaging (HSI) captures both the geometric shape and the spectral fingerprint of a target, providing richer characteristic information, and is therefore a promising tool for real-time classification of insect species. However, the massive data volume and computing power requirements of hyperspectral data cubes pose significant challenges for real-time processing on portable devices, which to some extent limits the adoption of HSI in online analysis scenarios.

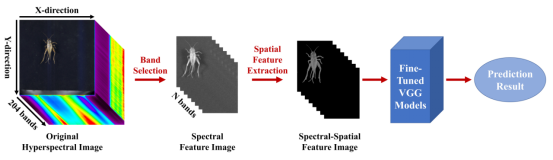

Figure 1. Preprocessing of HSI data and automatic classification of insect species based on characteristic spectral distillation.

In insect species identification, crickets of the genus Teleogryllus have always been a difficult case because they look so similar. This study used the PCA-VGG automatic classification model to identify three closely related cricket species, namely Teleogryllus emma, T. occipitalis, and T. mitratus. Figure 1 shows the preprocessing of the HSI data and the automatic classification process for the crickets. Because the original cricket hyperspectral dataset contains a large amount of redundant information, the team used a PCA band selection algorithm to extract feature bands and a K-means algorithm to obtain the spatial features of the cricket samples. The resulting spectral-spatial feature images of the crickets are then fed into a fine-tuned VGG model, which outputs the final predictions for the test set.
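The two preprocessing steps described above can be sketched in a few lines of NumPy. This is only an illustrative sketch under stated assumptions, not the authors' implementation: the article does not say how the PCA band selection ranks bands, so the variance-weighted loading score used here is an assumption, as are the function names `select_bands_pca` and `kmeans_mask`, the choice of 8 bands, and the synthetic data cube. The fine-tuned VGG stage is omitted.

```python
import numpy as np

def select_bands_pca(cube, n_bands=8, n_components=3):
    """Rank bands by variance-weighted PCA loadings (assumed scoring rule)."""
    h, w, b = cube.shape
    pixels = cube.reshape(-1, b).astype(np.float64)
    pixels -= pixels.mean(axis=0)  # center each band
    # Rows of vt are the principal axes expressed in band space.
    _, s, vt = np.linalg.svd(pixels, full_matrices=False)
    var = s[:n_components] ** 2
    weights = var / var.sum()
    # Score each band by its absolute loadings on the leading components.
    scores = (np.abs(vt[:n_components]) * weights[:, None]).sum(axis=0)
    top = np.argsort(scores)[::-1][:n_bands]
    return np.sort(top)

def kmeans_mask(features, n_clusters=2, n_iter=20, seed=0):
    """Cluster pixels with plain K-means and return a per-pixel label map."""
    h, w, b = features.shape
    x = features.reshape(-1, b).astype(np.float64)
    rng = np.random.default_rng(seed)
    centers = x[rng.choice(len(x), n_clusters, replace=False)].copy()
    for _ in range(n_iter):
        # Squared Euclidean distance from every pixel to every center.
        dist = ((x[:, None, :] - centers[None, :, :]) ** 2).sum(axis=2)
        labels = dist.argmin(axis=1)
        for k in range(n_clusters):
            if np.any(labels == k):  # skip empty clusters
                centers[k] = x[labels == k].mean(axis=0)
    return labels.reshape(h, w)

# Synthetic stand-in for a hyperspectral cube (height, width, bands):
# weak noise everywhere, plus a square "sample" that is bright in bands 20-27.
rng = np.random.default_rng(0)
cube = rng.normal(0.0, 0.01, size=(32, 32, 50))
cube[8:24, 8:24, 20:28] += 1.0

selected = select_bands_pca(cube, n_bands=8)
feature_image = cube[:, :, selected]   # reduced spectral-spatial image
mask = kmeans_mask(feature_image)      # sample vs. background labels

print("selected bands:", selected)
print("feature image shape:", feature_image.shape)
```

In a full pipeline, the reduced feature image (optionally masked to the sample region) would be what is passed to the classifier, which is how band selection cuts the data volume before inference.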

Figure 2. (a) T. emma sample; (b) confusion matrix of the PCA-VGG-8 model; (c) confusion matrix of the RGB image model.

Compared with models based on full-spectrum image datasets, RGB image datasets, and spectrum datasets, the PCA-VGG model significantly improves classification accuracy by selecting appropriate feature bands. With the PCA-VGG model based on spectral-spatial feature images (Figure 2(b)), the accuracy of insect identification reaches 88.0%, far higher than that of classification based on RGB images (Figure 2(c)). By combining model compression and edge deployment methods, the proposed model achieves an inference time of 5.02 ms, demonstrating the potential of this method for rapid species identification. In future species identification tasks, the characteristic spectral distillation method proposed in the paper is expected to enable large-scale identification of different insect species in a very short time, laying a technical foundation for efficient species census and ecological research.

Assistant Professor Xuquan Wang (Tongji University) and Associate Professor Zhuqing He (East China Normal University) are the corresponding authors of the paper. Zhiyuan Ma (Tongji University) and Mi Di (East China Normal University) are co-first authors. Other collaborators who made outstanding contributions to the paper include Professor Jian Zhang and Tianhao Hu (both from East China Normal University).

Paper information:

https://doi.org/10.1016/j.optlastec.2025.112420